Designing a Scalable Portfolio System

Exploring AI-assisted workflows to structure, visualise and ship a full UX portfolio from scratch.

Problem

I built this site after the closure of Immerse, when I needed to consolidate 8 years of immersive UX work into a cohesive portfolio. My goals were to:

Reconstruct case studies quickly but accurately from internal documentation

Build a CMS-backed site that could grow with future projects

I wanted to explore how Large Language Models (LLMs) could support structuring complex content and assist with repetitive workflows - not to replace my own writing, but to reduce bottlenecks in getting the main blocks in place without compromising accuracy. Then, after launching the initial version of the site, I prototyped a visually engaging, configurable UI component to better reflect my design values.

Overall, I used LLMs to support three overlapping aspects of the work: visual, structural, and technical.

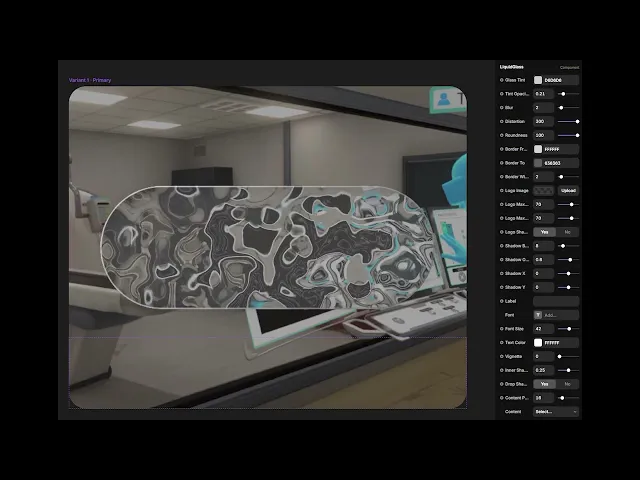

LiquidGlass UI Component

I wanted to make my portfolio more visually distinct and dynamic, so I built a glass effect that is now used across all my case study thumbnails.

Inspired by Apple’s liquid glass UI and building on earlier "frosted glass" work from the Immerse App, I aimed to create a responsive, legible distortion-based glass effect in Framer. It posed an interesting challenge: not just in how AI could support the process, but also in exploring practical effects, like how the added distortion could reduce the amount of blur needed for legibility.

The goal was to implement an animated SVG-based glass layer with blur, distortion, and tint. It needed to be responsive, support white text overlays, and maintain performance. I found a community-shared project that got some things right and felt like a good starting point. I then used ChatGPT as a coding and debug partner throughout.
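To make the three layers concrete, here is a minimal sketch of an SVG filter that chains distortion, blur, and tint. It is not the component's actual code: the function name, filter ID, and all numeric values are illustrative assumptions. Python is used only to generate and validate the markup, and the animation and Framer wiring are left out.

```python
import xml.etree.ElementTree as ET


def glass_filter_svg(filter_id="glass", blur=4.0, distortion=20.0,
                     tint="#ffffff", tint_opacity=0.15):
    """Return SVG markup for a distortion + blur + tint filter.

    All default values are placeholders, not the values used on the site.
    """
    return f"""<svg xmlns="http://www.w3.org/2000/svg" width="0" height="0">
  <filter id="{filter_id}" x="0%" y="0%" width="100%" height="100%">
    <!-- Noise texture that drives the distortion -->
    <feTurbulence type="fractalNoise" baseFrequency="0.01" numOctaves="2" result="noise"/>
    <!-- Displace source pixels using the noise channels -->
    <feDisplacementMap in="SourceGraphic" in2="noise" scale="{distortion}"
                       xChannelSelector="R" yChannelSelector="G" result="distorted"/>
    <!-- Soften the distorted result so overlaid text stays legible -->
    <feGaussianBlur in="distorted" stdDeviation="{blur}" result="blurred"/>
    <!-- Flat tint colour, merged on top of the blurred layer -->
    <feFlood flood-color="{tint}" flood-opacity="{tint_opacity}" result="tint"/>
    <feMerge>
      <feMergeNode in="blurred"/>
      <feMergeNode in="tint"/>
    </feMerge>
  </filter>
</svg>"""


markup = glass_filter_svg(blur=3.0, distortion=30.0)
ET.fromstring(markup)  # raises ParseError if the markup is malformed
```

Keeping blur and distortion as separate parameters makes it easy to test the trade-off described above, where more distortion allows less blur for the same legibility.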

It was a very patient partner: it helped me troubleshoot edge cases like rounded-corner masking and prototype features I wanted to test, including edge stroke effects, border color, border opacity, vignette, and edge shading.

The full-featured version caused noticeable slowdown when several instances appeared on a single page, so the version now on the site is a stripped-back build, refined to maintain performance.

Separately, I used ChatGPT to help debug implementation issues inside Framer, including problems with z-index stacking.

Archive System for Case Studies

I had over 15 immersive projects spanning 8 years, each with layered documentation: JIRA tickets, Figma files, UX diagrams, video walkthroughs, and more. To make sense of it all, I created an archiving framework and used ChatGPT to help organize and surface my own contributions, UX decisions, and measurable outcomes from each file.

All of this took place in a private ChatGPT Teams workspace to ensure security and control.

This wasn’t a one-click solution. I had to craft and refine targeted prompts for each project stage, ensuring they extracted only what was relevant. To achieve this, I did the following:

Designed and populated a folder structure organized by phase (Research, Design, Delivery, Outcomes)

Wrote targeted logging prompts instructing ChatGPT to extract facts only, without synthesis or assumptions, and refined these through trial and error

Manually processed each file, or batch of files, and saved the output into a document, creating a raw archive for each project

Documented the process to ensure it was repeatable and auditable
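The steps above can be sketched in a few lines of Python. This is an illustration under assumptions, not the workflow as it was actually run (the files were processed manually in ChatGPT): the prompt wording, the function names, and the `raw_archive.md` file name are all hypothetical. Only the four phase names come from the process described here.

```python
from datetime import date
from pathlib import Path

PHASES = ("Research", "Design", "Delivery", "Outcomes")

# Hypothetical wording; the real prompts were refined through trial and error.
LOGGING_PROMPT = (
    "Phase: {phase}. Source file: {source}.\n"
    "List only facts stated in the file: my contributions, UX decisions, "
    "and measurable outcomes. Do not summarise, infer, or fill gaps."
)


def create_archive(root, project):
    """Create one folder per phase for a project and return the project folder."""
    project_dir = Path(root) / project
    for phase in PHASES:
        (project_dir / phase).mkdir(parents=True, exist_ok=True)
    return project_dir


def build_prompt(phase, source):
    """Fill the logging prompt for one source file in one phase."""
    if phase not in PHASES:
        raise ValueError(f"Unknown phase: {phase}")
    return LOGGING_PROMPT.format(phase=phase, source=source)


def log_output(project_dir, phase, source, output):
    """Append a pasted model response to the raw archive, with its provenance."""
    log_path = Path(project_dir) / "raw_archive.md"
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(f"## {phase} | {source} | logged {date.today().isoformat()}\n\n")
        fh.write(output.strip() + "\n\n")
    return log_path
```

Recording the phase and source file next to every logged response is what keeps the archive traceable: any claim in a finished case study can be followed back to the document it came from.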

The result was a set of structured, traceable records I could mine for case study material. This was much faster and more complete than working purely from memory. It gave me an extremely detailed and accurate reminder of my involvement across projects, and allowed me to draft content I could refine and finalize with confidence. It also provides a great dataset I can query in the future.

I used the raw archive to generate rough outlines for each case study. I then rewrote each one to shape the final content.

Part of the workflow used to build a raw archive of past projects. Prompts were designed to extract facts, not generate content.

AI-Assisted Design

Once the case studies were complete as documents, I set about populating the CMS in Framer. I noticed that plugins were available to export and import CMS data, and I wondered whether I could automate the process, even if only to get the data in. I was learning Framer on the fly and needed to save time wherever I could. Using ChatGPT, I built a lightweight Python script that parsed my .docx case study files and output CMS-ready CSV data.

This included field mapping for:

Title, Subtitle, Dates, Hardware, Role

Full project sections (Problem, Process, Outcome, Reflection)

It also handled formatting inconsistencies, such as HTML in text fields and shortened date formats. I went through a few CSV versions, adjusting the script and fixing formatting edge cases as I went. This cut down what would’ve been days of manual input, letting me populate the CMS with clean, structured case study data, ready for a proper editorial pass.
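A minimal sketch of this kind of script follows, using only the standard library. It is not the original script. It assumes a hypothetical document layout in which metadata appears as "Field: value" lines and each section starts with a heading line that matches its field name exactly. Date normalisation is left out because the date formats involved are not specified here.

```python
import csv
import re
import xml.etree.ElementTree as ET
import zipfile

# WordprocessingML namespace used inside word/document.xml
W = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"
META_FIELDS = ["Title", "Subtitle", "Dates", "Hardware", "Role"]
SECTION_FIELDS = ["Problem", "Process", "Outcome", "Reflection"]


def read_paragraphs(docx_path):
    """Return the non-empty paragraphs of a .docx file as plain strings."""
    with zipfile.ZipFile(docx_path) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))
    paragraphs = []
    for para in root.iter(f"{W}p"):
        text = "".join(node.text or "" for node in para.iter(f"{W}t")).strip()
        if text:
            paragraphs.append(text)
    return paragraphs


def clean(text):
    """Strip stray HTML tags and collapse runs of whitespace."""
    return re.sub(r"\s+", " ", re.sub(r"<[^>]+>", "", text)).strip()


def to_row(paragraphs):
    """Map 'Field: value' lines and section headings onto one CMS row."""
    row = {field: "" for field in META_FIELDS + SECTION_FIELDS}
    current = None  # section currently being filled, if any
    for para in paragraphs:
        label, _, value = para.partition(":")
        if label.strip() in META_FIELDS and value:
            row[label.strip()] = clean(value)
        elif para.strip() in SECTION_FIELDS:
            current = para.strip()
        elif current:
            row[current] = (row[current] + " " + clean(para)).strip()
    return row


def write_csv(docx_paths, csv_path):
    """Write one CSV row per case study document."""
    with open(csv_path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=META_FIELDS + SECTION_FIELDS)
        writer.writeheader()
        for path in docx_paths:
            writer.writerow(to_row(read_paragraphs(path)))
```

Calling `write_csv(["case-study.docx"], "cms.csv")` (file names are placeholders) would produce one row per document. The column names would still need to match the field names expected by whichever Framer CMS import plugin is used.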

Outcome

A responsive, clean, CMS-powered portfolio, with 13 case studies, written from scratch and published within 4 weeks

A custom LiquidGlass UI component deployed across the site

An AI-supported archive system used to build case studies and align my CV

Reflection

At the beginning, this project felt like an impossible task. It became a kind of design operations experiment, made up of content triage, systems thinking, design, and AI collaboration.

While AI helped streamline parts of the process, this was ultimately a design-led project, grounded in structure, clarity, and user experience. The tools didn’t write my site or invent my case studies. But they did:

Help me create effects that would’ve previously been harder to achieve

Enable me to debug code

Surface patterns I might have missed

Translate complex ideas into structured data

Let me work through a large backlog of project history with precision