AstraZeneca VR Training Modules

Developed the core design approach for three immersive training modules, each translating complex pharmaceutical procedures into detailed virtual counterparts. Modules included both single-player and multiplayer scenarios, depending on the workflow.

Role

VR UX Designer

Target Hardware

Meta Quest 2/3, WebGL

Industry

Pharmaceutical Training

Date

Jan 2021 - May 2022

Note: All visuals shown are from an indicative pharmaceutical demo I designed to reflect the AstraZeneca projects while maintaining confidentiality.

Problem

AstraZeneca needed to modernize training for its complex manufacturing processes. Traditional methods were time-intensive, offered limited real-time feedback, and made it difficult to ensure consistency across operators.

We were tasked with designing immersive VR training modules that did more than replicate standard operating procedures (SOPs): they had to make training faster and more intuitive without sacrificing accuracy. Because certain workflows relied on team coordination, the solution had to support both solo and multi-user modes while staying grounded in real-world constraints.

My Role

I led UX design across multiple modules, responsible for everything from spatial mapping to interaction design and instructional flow. That included:

Conducting field research to understand tool handling, posture, and movement constraints

Translating paper SOPs into state-machine logic and interaction systems

Designing feedback-driven flows for both guided and unguided training modes

Writing voice-over (VO) scripts, guidance prompts, and error-correction flows

Collaborating with engineering, QA, and stakeholders through each build cycle

Process

Research

We kicked off with an on-site study inside AstraZeneca’s manufacturing facility. I captured over 400 photos and videos documenting tools, layouts, movement zones, and ergonomics, and recorded measurements and posture observations, since many actions required operators to rotate or reach within tight spatial margins. These constraints had to be reproduced accurately so the simulation would build muscle memory and support transfer to real-world workflows.

Alongside this, I reviewed SOPs and training manuals, breaking each process down into granular interaction steps. Some sequences were deceptively complex, such as using a tool to manipulate and divide powder, as were many other interactions involving specialised tools.

Design and Prototyping

I mapped every interaction into a spreadsheet-based state machine defining expected states, success conditions, and error handling. These flows drove everything from VO cue timing to controller mappings and system prompts. We modeled room layouts and teleport paths in Shapes to validate the environments and ensure key interactions held up ergonomically.
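The spreadsheet itself isn't reproduced here, but the idea of one row per interaction step (expected state, success condition, error handling, VO cue) can be sketched as a small state machine. This is a minimal Python sketch under stated assumptions: the step names, event strings, and prompts are hypothetical placeholders rather than the project's actual data, and a production build would implement this inside the game engine, not in Python.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Step:
    """One row of a hypothetical interaction spreadsheet."""
    name: str
    vo_cue: str          # VO line played when this step becomes active
    success_event: str   # the event that completes this step
    error_prompts: dict[str, str] = field(default_factory=dict)  # error event -> corrective prompt


class ProcedureStateMachine:
    """Advances through steps in order; unexpected events never advance the state."""

    def __init__(self, steps: list[Step]):
        self.steps = steps
        self.index = 0

    @property
    def current(self) -> Step | None:
        return self.steps[self.index] if self.index < len(self.steps) else None

    @property
    def complete(self) -> bool:
        return self.index >= len(self.steps)

    def handle(self, event: str) -> str:
        """Return the prompt to play in response to an interaction event."""
        step = self.current
        if step is None:
            return "Procedure complete."
        if event == step.success_event:
            self.index += 1
            nxt = self.current
            return nxt.vo_cue if nxt else "Procedure complete."
        if event in step.error_prompts:
            return step.error_prompts[event]
        # Unmapped event: repeat the current instruction.
        return step.vo_cue


# Hypothetical two-step excerpt of a powder-handling procedure.
steps = [
    Step("pick_up_spatula", "Pick up the spatula.", "spatula_grabbed",
         {"wrong_tool_grabbed": "That's not the spatula. Put it back and pick up the spatula."}),
    Step("divide_powder", "Divide the powder into two equal portions.", "powder_divided",
         {"moved_too_fast": "Slow down to avoid spilling the powder."}),
]
sm = ProcedureStateMachine(steps)
sm.handle("wrong_tool_grabbed")  # corrective prompt, state unchanged
sm.handle("spatula_grabbed")     # advances; returns the next step's VO cue
```

Keeping success and error events on the same row mirrors the spreadsheet layout: a designer can add an error case without touching the progression logic.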

I implemented a layered feedback system (audio, visual, and haptic) that responded to common user mistakes such as dropping items, moving too fast, or skipping steps. To support the three training modes (Learn, Practice, and Assessment), I designed branching logic that scaled guidance to each mode.
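One way to express mode-scaled feedback is a table mapping each training mode to the feedback channels that fire on an error, plus a simple check for one of the listed mistakes, moving too fast. The sketch below is illustrative only: the per-mode channel mapping, the function names, and the 0.5 m/s speed threshold are assumptions, not values from the AstraZeneca modules, and hand positions are taken to be in metres.

```python
from __future__ import annotations

import math
from enum import Enum


class Mode(Enum):
    LEARN = "learn"
    PRACTICE = "practice"
    ASSESSMENT = "assessment"


# Hypothetical mapping: which feedback channels fire on an error in each mode.
FEEDBACK_CHANNELS = {
    Mode.LEARN: ("audio", "visual", "haptic"),
    Mode.PRACTICE: ("visual", "haptic"),
    Mode.ASSESSMENT: (),  # errors are recorded for scoring, not corrected live
}

MAX_HAND_SPEED_M_PER_S = 0.5  # hypothetical threshold, not a project value


def hand_speed(prev_pos: tuple[float, ...], curr_pos: tuple[float, ...], dt: float) -> float:
    """Average hand speed in m/s between two tracked positions dt seconds apart."""
    return math.dist(prev_pos, curr_pos) / dt


def feedback_for_error(mode: Mode, error: str) -> list[tuple[str, str]]:
    """Return the (channel, error) pairs to trigger for this mode."""
    return [(channel, error) for channel in FEEDBACK_CHANNELS[mode]]


def check_hand_motion(mode, prev_pos, curr_pos, dt, error_log):
    """Log a speed error if the hand moved too fast, and return the feedback to trigger."""
    if hand_speed(prev_pos, curr_pos, dt) > MAX_HAND_SPEED_M_PER_S:
        error_log.append("moved_too_fast")
        return feedback_for_error(mode, "moved_too_fast")
    return []


log = []
check_hand_motion(Mode.LEARN, (0.0, 0.0, 0.0), (0.03, 0.04, 0.0), 0.05, log)
# 0.05 m in 0.05 s is 1.0 m/s, above the threshold: audio, visual, and haptic feedback fire
```

In Assessment mode the same error is still appended to `error_log` for scoring, but no live correction is returned, which is one way to keep a single detection path while varying how much guidance each mode gives.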

Live UX reviews were built into every milestone. I reviewed early builds in headset with stakeholders and QA, flagging misaligned interaction zones, mistimed VO cues, and unclear visual elements, and adjusted the flow as needed.

Implementation

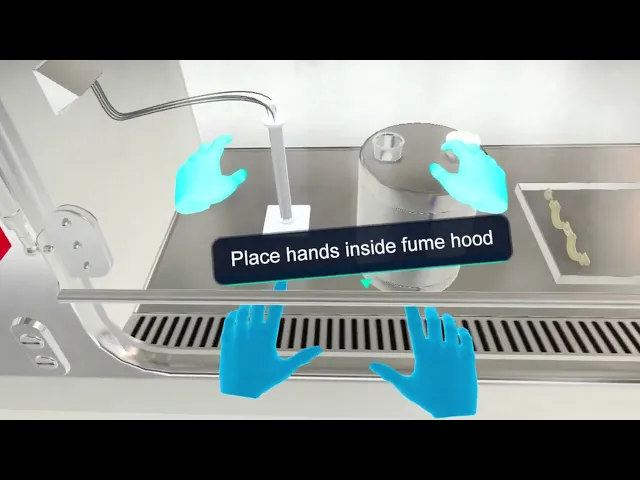

For each module, I delivered annotated scripts, timing spreadsheets, and VO line references tied directly to interaction triggers. In multi-user modules, I designed coordination systems such as ghosted hands and object-pass mechanics, allowing one user to guide another or hand off tools mid-procedure. This meant working closely with engineers to sync user states, animations, and roles.
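The tool hand-off can be reduced to an ownership rule: a shared tool has at most one holder, and a pass completes only when the current holder offers it and the intended receiver accepts. The following Python sketch assumes that single-owner rule and uses hypothetical names (`SharedTool`, `grab`, `offer`, `accept`); it models only the local logic and leaves out network replication of the state between users.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SharedTool:
    """A tool that two users can pass between them; at most one holder at a time."""
    name: str
    owner: str | None = None       # user currently holding the tool
    offered_to: str | None = None  # pending receiver of a hand-off, if any

    def grab(self, user: str) -> bool:
        """Pick up the tool only if nobody is holding it."""
        if self.owner is None:
            self.owner = user
            return True
        return False

    def offer(self, giver: str, receiver: str) -> bool:
        """Only the current holder can start a hand-off, and not to themselves."""
        if self.owner != giver or receiver == giver:
            return False
        self.offered_to = receiver
        return True

    def accept(self, receiver: str) -> bool:
        """Complete the hand-off; ownership moves in a single step."""
        if self.offered_to != receiver:
            return False
        self.owner, self.offered_to = receiver, None
        return True


spatula = SharedTool("spatula")
spatula.grab("trainee_a")
spatula.offer("trainee_a", "trainee_b")
spatula.accept("trainee_b")  # spatula.owner is now "trainee_b"
```

Requiring an explicit offer before ownership changes keeps both users' views consistent: the tool is never held by two people at once, and a rejected or ignored offer leaves the original holder in control.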

Solution

Modules 1 & 2:

Solo-mode VR experiences focused on precise interaction sequences, visual and audio cues, and detailed feedback structures. They included beginner, learning, and practice modes.

Module 3:

A collaborative VR simulation, playable solo or by two users, that supported real-time coordination. Features included synchronized object passing, dual VO flows, and error-triggered resets.

Interface Features:

Contextual onboarding

Snap-to-target guides, contextual highlights, hand tracking constraints, hand movement speed tracking

VO-driven progression gates and error correction

Tracking of user actions (e.g., dropped items, speed errors), with corrective guidance delivered through haptics, voice-overs, and visual cues to ensure accuracy.

Training architecture supporting simultaneous WebGL and VR headset users, in both single- and multi-user VR modes.

Outcome

Measured Results:

50% reduction in training duration compared to traditional SOP-led methods

52% faster achievement of operator competency

Deployed across AstraZeneca’s internal VR training programs with ongoing influence on subsequent modules

The final build passed QA across both solo and multi-user modes, with full implementation of interaction flow logic, VO scripting, and interaction design. Internal teams gave strongly positive feedback on accuracy, polish, and usability.

Reflection

What Worked Well:

Close integration of field research with design documentation ensured real-world fidelity.

Cross-functional collaboration was key to accurate implementation of complex state logic.

Branching flows and feedback systems enhanced adaptability for different trainee levels.

Challenges:

Hand-tracking precision and VO cue alignment required repeated iteration.

Multiplayer syncing in Module 3 introduced unique technical and UX hurdles.

Iterative QA revealed nuanced interaction bugs tied to controller behavior and spatial occlusion.