Ford VR Authoring

Originated within the HSSMI research partnership with Ford. Evolved from CAD import tests into a full authoring proof-of-concept built with the Immerse SDK.

Role

VR UX Designer / Producer

Target Hardware

Oculus Rift

Industries

Automotive Manufacturing / Industrial Design

Date

Jun 2018 - Mar 2020 (on and off development)

Problem

Ford was investigating ways to scale VR training across its global operations without relying on developers for every new piece of content. We prototyped a bespoke authoring tool that would allow non-technical subject matter experts (SMEs) to create, edit, and deploy interactive training scenarios directly in VR. The tool needed to be simple enough for trainers to use without coding skills, yet robust enough to handle nuanced, realistic interactions and clear sequencing.

The core challenge was designing a UI that simplified complex logic creation. We had to translate abstract concepts (triggers, conditions, and interaction sequences) into a tactile, spatial interface that felt natural to use.

My Role

I served as the Lead UX Designer, owning the product’s design strategy from concept to delivery.

Product Strategy: Defined the feature set and user flows for the authoring system, balancing flexibility with usability.

Interaction Design: Designed the spatial interface for the 'Action/Trigger/Condition' logic system, ensuring users could visualize and manipulate training rules easily.

Prototyping: Created wireframes and interactive spatial prototypes to test the system before development.

User Testing: Ran usability sessions with Ford’s training teams to validate the tool’s intuitiveness and iterate on the UI.

Project Management: Scoped and managed Jira tickets, stand-ups, and QA sessions.

Process

Research & Design

Designed the full platform flow from authoring to playback and data reporting. Scoped an MVP for early deployment, which later informed the Immerse SDK's Guides functionality.

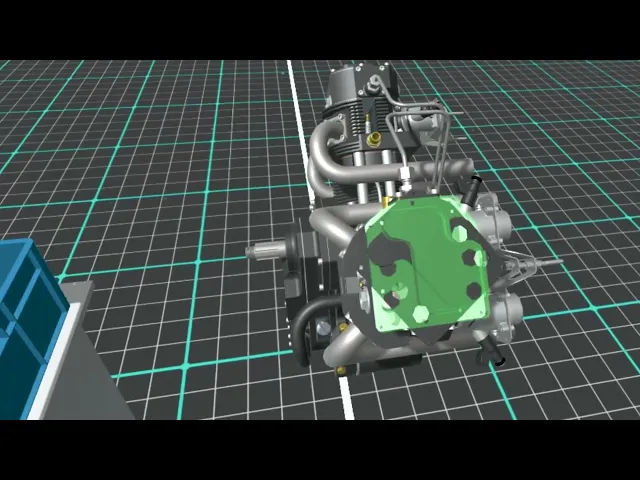

Participated in early CAD-to-Unity feasibility tests and identified model and scaling inconsistencies. Ran frequent in-VR tests with internal staff to refine early interface designs iteratively within Unity.

I mapped out the training scenarios Ford wanted to model first. We then worked out the triggers and conditions that accompanied each required user action, and these formed the core of the UX. A second core assumption was that the CAD models would start in a 'completed' state (in their final form).
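To illustrate the kind of logic the interface had to expose, here is a minimal Python sketch of a trigger/condition/action rule. It is not the project's code (the tool was built in Unity), and every name in it (`Rule`, `part_released`, `in_snap_zone`, `step_complete`) is a hypothetical chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical scenario state: a plain dictionary of named values.
State = Dict[str, object]


@dataclass
class Rule:
    """One authored rule: when `trigger` fires and every condition holds, run the actions."""
    trigger: str
    conditions: List[Callable[[State], bool]] = field(default_factory=list)
    actions: List[Callable[[State], None]] = field(default_factory=list)

    def handle(self, event: str, state: State) -> bool:
        """Return True if the rule fired for this event, False otherwise."""
        if event != self.trigger:
            return False
        if not all(condition(state) for condition in self.conditions):
            return False
        for action in self.actions:
            action(state)
        return True


def mark_step_complete(state: State) -> None:
    """Example action (hypothetical): flag the current training step as done."""
    state["step_complete"] = True


# Example rule: releasing a part inside its snap zone completes the step.
snap_rule = Rule(
    trigger="part_released",
    conditions=[lambda state: bool(state.get("in_snap_zone"))],
    actions=[mark_step_complete],
)
```

Calling `snap_rule.handle("part_released", state)` does nothing while `state["in_snap_zone"]` is false, and marks the step complete once it is true. The design problem was to let an SME build the equivalent of `snap_rule` by hand in VR, without ever seeing it written out like this.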

Designing the Spatial UI

Early menus made it difficult to set up sequences in VR. I iterated towards a contextual menu system that attached to objects, giving the user quick access to tools for tasks such as recording paths and orientations. This separated the sequencing logic from object setup and reduced the initial work required before the user could begin. For path setting, I used a nodal system where authors could physically draw lines between objects, and a freehand system for more nuanced interactions.

Solution

We delivered a functional Proof-of-Concept (POC) on the HTC Vive that allowed non-technical teams to build and validate training content.

Haptic Guidance System

I designed a haptic feedback system to make the assembly tasks feel physical. This included an 'engagement point' along the movement path. Once the user moved the object past this point, they felt a low haptic rumble that increased in intensity, confirming the fit before the final snap.
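The ramp can be sketched as a simple mapping from progress along the path to rumble strength. This is an illustrative Python sketch, not the shipped implementation: the linear ramp, the function name, and the default values (`engagement_point=0.7`, `min_intensity=0.1`, `max_intensity=1.0`) are all assumptions made for the example.

```python
def haptic_intensity(
    progress: float,
    engagement_point: float = 0.7,  # assumed: fraction of the path where rumble starts
    min_intensity: float = 0.1,     # assumed: the initial "low rumble"
    max_intensity: float = 1.0,     # assumed: strength just before the snap
) -> float:
    """Map progress along the path (0.0 to 1.0) to a rumble amplitude (0.0 to 1.0)."""
    if not 0.0 <= engagement_point < 1.0:
        raise ValueError("engagement_point must be in [0.0, 1.0)")

    # Clamp so that overshooting the path ends does not produce odd values.
    progress = min(max(progress, 0.0), 1.0)

    # No feedback until the object passes the engagement point.
    if progress < engagement_point:
        return 0.0

    # Linear ramp from min_intensity at the engagement point
    # to max_intensity at the end of the path.
    t = (progress - engagement_point) / (1.0 - engagement_point)
    return min_intensity + t * (max_intensity - min_intensity)
```

With these assumed defaults, the controller stays silent for the first 70% of the path, then ramps from a faint rumble to full strength as the part approaches its snap position.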

Instant Feedback Loop

To let authors test their work immediately, I designed a 'Play Mode' that allowed them to toggle seamlessly between Editing/Recording (placing triggers, setting rules) and Playing (experiencing the training as a student). This feedback loop allowed users to debug their own scenarios without leaving the area they were working in.

Final Interaction System

The final system featured an in-VR contextual UI for manipulating model parts and a path recording tool that supported both linear and freehand movement with immediate preview. We also mocked up future capabilities, including customizable haptic zones (rumble vs. click) and step-editable VO guidance with a scrubbable timeline, demonstrating the tool's long-term potential.

Outcome

Functional Delivery: We delivered a working proof of concept deployed in Unity, supported by full UX flow diagrams and context menu specifications. I also scripted and narrated the concept video used for internal alignment and executive presentation (above).

Strategic Impact: The project earned executive endorsement and contributed to a Ford 3-year VR roadmap (though this was paused due to COVID-19).

Engineering Wins: Multiple features were successfully implemented based on my UX specs: path definition, previews, animated snap targets, and complex haptics. Crucially, this authoring UX directly influenced the creation of the 'Guides' system in the Immerse SDK (Lines, Points, and Planes for spatial alignment), which became a standard tool used in many projects.