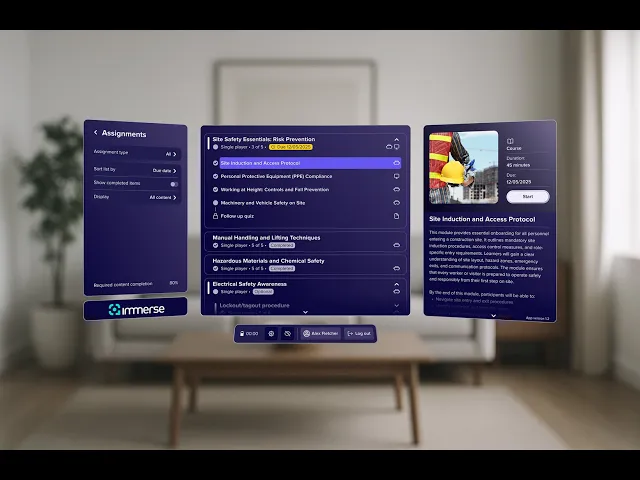

Immerse App

Developed as a fork of the Meta Training Hub, the Immerse App evolved into a commercial VR LMS product with real-world user deployments and integration with backend administration controls.

Role

VR UX Designer

Target Hardware

Meta Quest 2/3, Pico Neo3/4

Industries

Education Technology / VR Learning Platforms

Date

Oct 2022 - Jun 2025

Problem

The Immerse App began as an offshoot of the work done for the Meta VR Training Hub. We set out to build something future-facing and flexible enough to serve as a general-purpose VR training LMS for clients across sectors.

At its core, the problem was that most existing immersive systems didn’t scale and offered little support for content hierarchies or reporting. Clients also needed a consistent way to theme the app to their brand, and one key client had expressed a strong interest in real-time multi-user collaboration for arranging scenes in VR.

My Role

I led the design for the VR frontend of the LMS. My work spanned:

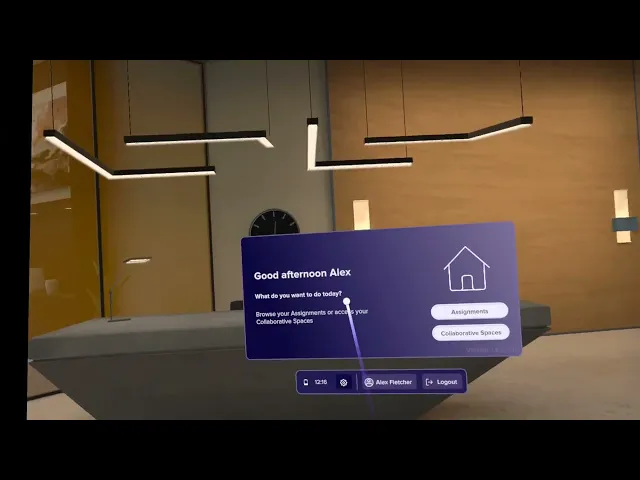

Designing spatial UI layouts and panel behaviors that felt intuitive and comfortable in VR

Prototyping and validating interaction flows using ShapesXR to catch ergonomic or clarity issues early

Defining the logic for the theming system in Figma, then collaborating with developers to implement it in Unity via structured JSON

Scoping and iterating on all features, from collaborative scene editing and passthrough mode to UI tracking, session browsing, and general ergonomics

Working closely with engineers, PMs, and fellow designers to align the in-headset experience with backend logic and Admin-facing workflows
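
To make the theming item above more concrete, here is a minimal sketch of how a structured JSON theme could be merged onto app defaults. It is an illustration only, not the production schema: the token names, default values, and the `load_theme` helper are all hypothetical, and Python stands in for the Unity implementation.

```python
import json
import re

# Hypothetical default design tokens; the real Immerse App schema is not
# described in this case study.
DEFAULT_THEME = {
    "primaryColor": "#1A73E8",
    "panelBackground": "#202124",
    "textColor": "#FFFFFF",
    "cornerRadius": 12,
}

# Tokens that must hold a six-digit hex colour such as "#FF6600".
COLOR_TOKENS = {"primaryColor", "panelBackground", "textColor"}
HEX_COLOR = re.compile(r"^#[0-9A-Fa-f]{6}$")


def load_theme(raw_json):
    """Merge a client's overrides onto the defaults.

    Unknown tokens are ignored and malformed colours fall back to the
    default, so a bad theme file cannot leave a panel unstyled.
    """
    overrides = json.loads(raw_json)
    theme = dict(DEFAULT_THEME)
    for key, value in overrides.items():
        if key not in DEFAULT_THEME:
            continue  # token the app does not know about
        if key in COLOR_TOKENS and not (
            isinstance(value, str) and HEX_COLOR.match(value)
        ):
            continue  # keep the default when the colour is malformed
        theme[key] = value
    return theme


# Example client file: one valid override, one invalid colour, one unknown token.
client_theme = load_theme(
    '{"primaryColor": "#FF6600", "textColor": "white", "logoUrl": "x"}'
)
```

Falling back to defaults per token, rather than rejecting the whole file, is one way to keep a partially branded app usable while a client's theme is still being refined.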