QinetiQ

Developed in partnership with the Royal Navy under the iMAST defense consortium, this project focused on designing and delivering realistic procedural simulations for mission-critical operations.

Role

VR UX Designer / Producer

Target Hardware

PCVR (HTC Vive / Vive Pro)

Industries

Defense / Military Training

Date

Dec 2017 - Jul 2018

Note: The content shown has been selectively edited for clarity and confidentiality. The flow chart is a partial, modified version created for illustrative purposes. No classified material is presented.

Problem

Manual training assessment did not scale and lacked data-driven tools.

Submarine operational training was subject to classification constraints and could only be reproduced with limited realism.

High-stakes training demanded realistic interaction models without compromising usability or safety.

My Role

Scrum Master and VR UX Designer.

Team Lead: Ran the project using Scrum, scoping and structuring the sprint cadence, managing tickets in JIRA, and leading daily stand-ups.

Design Lead: Sole author of the design documents, interaction flows, and spatial logic.

Stakeholder Liaison: Acted as the primary point of contact with QinetiQ and the Royal Navy, managing scope, expectations, and demos.

Process

Research & Discovery:

Analysed training videos, physical procedures, and spatial constraints.

Modeled emergency firing flows and submarine escape logic.

Design & Interaction:

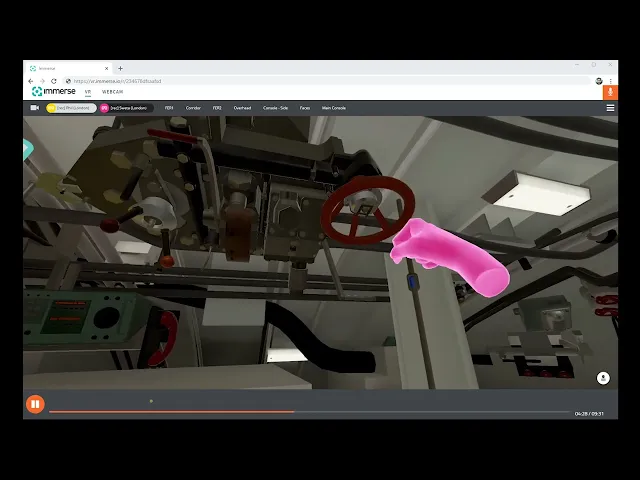

Created a modular, federated XR flow: two users in VR and one observer via WebGL.

Mapped real-world procedures to intuitive VR actions (e.g., torch inspections, valve rotations).

Designed visual and auditory error feedback, along with gamified success metrics.

Technical Collaboration:

Worked with SDK and Unity developers, QA, and artists.

Designed and tested custom teleport mechanics and VOIP-based comms panels.

PM & Sprint Management:

Led the team through every sprint.

Held stakeholder reviews and managed project risks and backlog priorities.

Solution

Federated VR Experience:

Two fully linked VR zones: the Escape Compartment and the Ship Control Room.

Enabled cross-compartment VOIP and real-time coordination of interactions between users.

Interaction Design:

More than 14 custom interactables (screwdrivers, valves, torches, etc.), each fully operable and designed for realism.

Delivered fully narrated procedural walkthroughs for the client.

Gamified Reporting Tools:

Defined reporting criteria for monitoring trainee performance (session duration, errors, etc.).

Cross-Platform Compatibility:

A real-time WebGL observer view enabled session recording and post-training feedback.

Outcome

Delivery

The full system was delivered on time and showcased at the Farnborough event.

Stakeholder Response

QinetiQ and the Royal Navy praised the system's immersive realism and modular structure.

Broader Impact

Introduced gamification and real-time feedback into military VR training.

Enabled remote collaboration and post-session review within a secure VR environment.

Improved the Immerse SDK through teleportation UX and analytics enhancements.

Reflection

Challenges:

Balancing realism and procedural integrity while working under classified restrictions.

Coordinating multi-stakeholder input while keeping sprints on track.

What I’m Proud Of:

Owning the full UX flow from documentation through to delivery.

Building an engaging, data-rich procedural training environment.

Presenting work through narrative-led demos that resonated with non-technical stakeholders.

Learning & Relevance:

Gained experience in federated user flows, spatial audio design, and precision input with tool-based interactions.

Reinforced the value of user-first design even in high-stakes, constraint-heavy projects.