Yale & 2U Cardiac Exam VR Pilot

Commissioned by 2U and Yale School of Medicine, the pilot addressed the need for flexible, accessible, and high-fidelity medical training tools that reinforce classroom and hospital-based instruction.

Role

VR UX Designer / Producer

Target Hardware

Meta Quest 2

Industries

Medical Education

Date

Jul 2022 - Oct 2023

Problem

Medical students in Yale’s Physician Assistant Online Program had limited opportunities to practice comprehensive cardiac exams before interacting with real patients. Existing remote learning tools, like 2D videos or static mannequins, lacked the necessary realism or interactive fidelity.

The client required a high-fidelity VR simulation that included voiceover, a scoring system, and multiplayer capabilities, all within a tight timeline. Midway through the project, leadership disruptions and unclear sprint priorities further destabilized development, threatening the delivery schedule.

My Role

I served as the primary UX Designer while also stepping in as Interim Producer to stabilize the project during a critical period.

UX Designer Responsibilities:

Led design in collaboration with engineers, QA, and Yale’s Program Director. Facilitated stakeholder feedback loops to align clinical realism and educational goals.

Defined user flows, hand interactions, audio/haptic feedback systems, and UI logic using ShapesXR.

Authored and prototyped immersive interactions (e.g., palpation, auscultation, patient dialogue).

Developed original features designed for enhanced spatial learning, including nuanced proximity and snap-target interactions for palpation and auscultation.

Interim Producer Responsibilities:

Assumed PM duties during project instability.

Led standups, audited the backlog, scheduled sprints, and renegotiated scope with 2U and Yale.

Stabilized the team, hit critical milestones, and maintained client trust through transparent communication.

Process

Clinical Groundwork & Prototyping

We anchored the design in clinical rigor. I consulted directly with a Subject Matter Expert (SME) at Yale to deconstruct the entire exam flow across the four examination stages leading to the final diagnosis. I mapped these steps into a detailed user journey and prototyped the entire spatial layout and UI in ShapesXR. This early visualization allowed us to validate patient-tablet placements and proximity triggers before writing code, significantly reducing rework later in development.

Designing for Touch and Sound

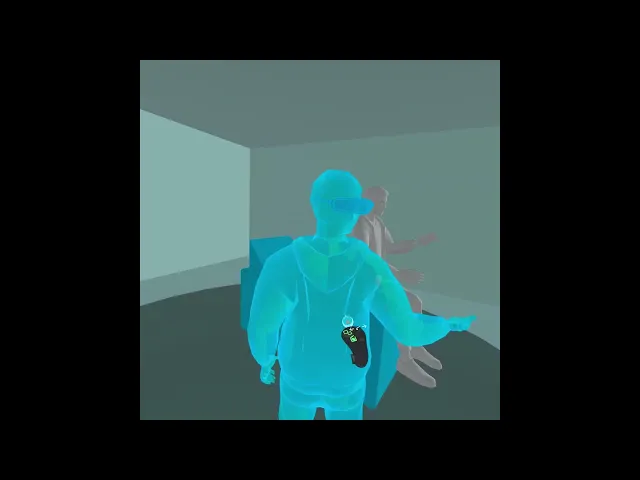

We simulated 'palpation' (feeling for physical signs) and 'auscultation' (listening). I worked with engineers to refine avatar hand poses and implement haptic feedback that triggered when users correctly positioned their hands on the patient's chest. For auscultation, we designed a system where heart sounds, such as murmurs or gallops, would become increasingly audible only when the stethoscope was placed in the precise anatomical zones. Both of these interactions used our SDK's point guide system to subtly guide avatar hands within a limited proximity zone.
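To make the two proximity behaviors above concrete, here is a minimal sketch in Python. It is not the shipped code or the SDK's point guide API; the zone name, radii, smoothstep falloff, and the `heart_sound_gain` and `guide_hand` helpers are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class AuscultationZone:
    """Hypothetical listening zone on the patient's chest."""
    name: str
    center: Vec3   # position in chest space, metres (assumed units)
    radius: float  # distance beyond which the sound is silent (assumed value)


def heart_sound_gain(stethoscope: Vec3, zone: AuscultationZone) -> float:
    """Return 0.0 outside the zone, rising smoothly to 1.0 at its centre."""
    d = math.dist(stethoscope, zone.center)
    if d >= zone.radius:
        return 0.0
    t = 1.0 - d / zone.radius        # 0 at the edge, 1 at the centre
    return t * t * (3.0 - 2.0 * t)   # smoothstep easing (an assumed curve)


def guide_hand(hand: Vec3, target: Vec3, guide_radius: float, strength: float) -> Vec3:
    """Nudge the avatar hand toward the target, but only inside guide_radius.

    strength is the fraction of the remaining distance to close (0..1).
    """
    if math.dist(hand, target) > guide_radius:
        return hand  # too far away: leave the hand where the user put it
    return tuple(h + (t - h) * strength for h, t in zip(hand, target))


# Example values are illustrative only.
mitral = AuscultationZone("mitral", (0.0, 0.0, 0.0), 0.04)
gain_at_edge = heart_sound_gain((0.05, 0.0, 0.0), mitral)
gain_halfway = heart_sound_gain((0.02, 0.0, 0.0), mitral)
nudged = guide_hand((0.02, 0.0, 0.0), mitral.center, 0.05, 0.5)
```

The gain would drive the heart-sound volume each frame, and the guide keeps assistance subtle: outside the limited proximity zone the hand is never moved.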

Stabilizing Production

Midway through, the project faced instability due to leadership changes. I stepped in to restructure the sprint plans and introduced velocity tracking. By clarifying the backlog and defining a phased delivery model (Learn, Practice, Assessment modes), I helped the team recover momentum and restore client confidence.

Multimodal Interface Design

To support the complex workflow, I designed a multimodal interface that combined tablet-based menus for patient history with floating prompts for real-time guidance. We also implemented a 'patient dialogue' system, allowing students to instruct the virtual patient to perform various actions/poses.

Solution

We delivered a modular VR training simulation on the Meta Quest 2 with three distinct modes:

Learn Mode: Step-by-step guided training with instructional voiceovers and anatomical overlays.

Practice Mode: Semi-guided exploration with scoring hints and corrective feedback.

Assessment Mode: Unguided diagnostic challenge with live scoring and patient condition variability.
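One way to picture the three modes is as a single simulation with different feature flags per mode. The sketch below is an assumption about structure, not the actual implementation: the `Mode` and `ModeConfig` names are hypothetical, and any flag the descriptions above do not mention is left off by default.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    LEARN = "learn"
    PRACTICE = "practice"
    ASSESSMENT = "assessment"


@dataclass(frozen=True)
class ModeConfig:
    """Hypothetical per-mode feature flags; all default to off."""
    step_by_step_guidance: bool = False
    voiceover: bool = False
    anatomical_overlays: bool = False
    scoring_hints: bool = False
    corrective_feedback: bool = False
    live_scoring: bool = False
    variable_patient_condition: bool = False


# Only features named in the mode descriptions are switched on.
MODE_CONFIGS = {
    Mode.LEARN: ModeConfig(
        step_by_step_guidance=True, voiceover=True, anatomical_overlays=True
    ),
    Mode.PRACTICE: ModeConfig(scoring_hints=True, corrective_feedback=True),
    Mode.ASSESSMENT: ModeConfig(live_scoring=True, variable_patient_condition=True),
}


def is_guided(mode: Mode) -> bool:
    """True when the mode offers any form of in-session guidance."""
    cfg = MODE_CONFIGS[mode]
    return cfg.step_by_step_guidance or cfg.scoring_hints or cfg.corrective_feedback
```

Keeping the differences in data rather than in separate scenes is what makes a phased delivery practical: each mode reuses the same interactions and only toggles guidance and scoring.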

Key Features:

Multiple patient avatars with variable pathologies.

Interaction fidelity: hand gestures mapped to palpation zones and specific controller gestures, snap/feedback logic, and tool use.

Clinical fidelity: dynamic auscultation with spatialized heart sounds and context-sensitive audio cues.

Outcome

Delivery Success

Despite the mid-project disruption, we delivered a fully functioning pilot with all core features, including scoring, voiceover, and multiplayer, at high clinical realism and without any major design rework.

Recognition

Developer feedback cited UX mapping as 'highly efficient and implementation-ready.' The senior developer on the team credited me with 'salvaging' the project.

Design Alignment

We aligned with Yale School of Medicine stakeholders on the design vision, ensuring a clear interface flow and patient logic.

Reflection

What Went Well:

Spatial prototyping (in ShapesXR) largely eliminated rework; nearly all elements went to final without major redesign.

The flexibility to move between roles (Designer/PM) kept the project on track. This demanded diplomacy, leadership, and rapid triage of incomplete tasks and blockers.

The project showcased VR’s fuller potential for clinical education by letting students physically practice the skills required to perform the examinations.

Key Takeaway

VR UX design requires not only user-centric thinking but also spatial, embodied logic and cross-sensory integration.